In today’s data-driven world, relational databases are no longer sufficient to handle every type of data, particularly unstructured data like text, images, and audio. This is where vector databases come into play.

In this article, we’ll explore:

- Why vector databases are essential

- Limitations of relational databases in managing unstructured data

- How vector databases operate

- Use cases of vector databases

Limitations of relational databases in managing unstructured data

Structure is a Limitation

Relational databases rely on predefined schemas. They are good for transactional systems and analytical tasks involving structured data like sales records or user demographics. However, they falter when dealing with:

- Irregular formats

- Non-tabular relationships

- High-dimensional data, like feature vectors (embeddings)

Poor Performance for Similarity Search

Relational databases are optimized for exact matches, aggregations, and joins, not for similarity searches. For example:

- Searching for “documents similar to this one” or “images close in style” is computationally expensive.

- Storing feature embeddings in RDBMS tables results in poor performance because traditional indexes such as B-trees are designed for exact-match and range lookups, not for nearest-neighbor search over high-dimensional vectors.
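To make the cost concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name `docs`, the three sample rows, and the helper `most_similar` are all hypothetical and chosen only for illustration. Because the table has no vector index, the only way to answer "which rows are most similar?" is to read every row and score it in application code.

```python
import json
import math
import sqlite3


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical table: embeddings are stored as JSON text in an ordinary column.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, title TEXT, embedding TEXT)")
sample_rows = [
    (1, "intro to databases", [0.9, 0.1, 0.0]),
    (2, "sql joins explained", [0.8, 0.2, 0.1]),
    (3, "cat photo gallery", [0.0, 0.1, 0.9]),
]
conn.executemany(
    "INSERT INTO docs VALUES (?, ?, ?)",
    [(doc_id, title, json.dumps(emb)) for doc_id, title, emb in sample_rows],
)


def most_similar(conn, query, k=2):
    """Full table scan: every row is fetched, decoded, and scored."""
    scored = []
    for _doc_id, title, emb_json in conn.execute("SELECT id, title, embedding FROM docs"):
        scored.append((cosine_similarity(query, json.loads(emb_json)), title))
    scored.sort(reverse=True)
    return [title for _score, title in scored[:k]]


result = most_similar(conn, [1.0, 0.0, 0.0])
print(result)
```

With three rows this is instant, but the work grows linearly with both the number of rows and the embedding dimension, which is the bottleneck a dedicated vector index is meant to avoid.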

Inability to Support AI Features

Relational databases are a poor fit for many AI workloads. They can’t easily store, index, or search vector data (embeddings), which are essential for modern AI tools like recommendation systems, semantic search engines, and anomaly detection.

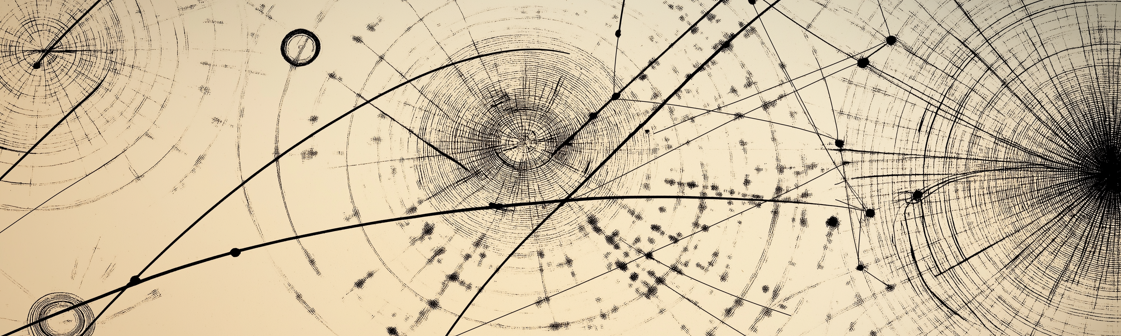

How Vector Databases Work

Role of vectors in AI

AI models often transform unstructured data into fixed-size vector embeddings. These embeddings are mathematical representations that capture the essence of the data.

For example:

- A sentence embedding may encode semantic meaning

- An image embedding may represent shapes, colors, or textures

Such embeddings are typically high-dimensional vectors (e.g., 512 dimensions), and comparing them numerically is what enables similarity-based search.
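The "fixed-size" property can be illustrated with a toy example. The function `toy_embed` below is hypothetical and is not how real embedding models work: it simply hashes each word into one of `dim` buckets, so it captures word overlap, not semantic meaning. It is only a sketch of the idea that inputs of any length map to vectors of the same length.

```python
import hashlib
import math


def toy_embed(text, dim=8):
    """Toy stand-in for an embedding model (assumption: hashed bag of words)."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # Use a stable hash so results are the same on every run.
        digest = hashlib.md5(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dim
        vec[bucket] += 1.0
    # Normalize to unit length so vectors of different texts are comparable.
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


short = toy_embed("vector databases store embeddings")
longer = toy_embed("a much longer sentence about vector databases and the embeddings they store")
print(len(short), len(longer))
```

Both inputs produce a vector with `dim` entries, regardless of how many words they contain. A real model learns its vectors from data, so that texts with similar meaning end up close together even when they share no words.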

Vector Indexing

Vector databases use specialized index structures and search algorithms like:

- KD-Trees: Efficient for low-dimensional data but increasingly ineffective as the number of dimensions grows

- Approximate Nearest Neighbor (ANN) indexes: Algorithms like HNSW (Hierarchical Navigable Small World) trade a small amount of accuracy for much faster search in high-dimensional spaces

These indexing methods ensure rapid similarity searches, enabling applications like facial recognition, document retrieval, and personalized recommendations.
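As a rough illustration of how an index avoids scanning every vector, here is a minimal KD-tree sketch in plain Python. It performs exact nearest-neighbor search on 2-dimensional points. It is not HNSW and not what a production vector database would ship; the function names `build_kdtree` and `nearest` are made up for this example.

```python
import random


def build_kdtree(points, depth=0):
    """Recursively split the points on one axis per level, at the median."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),
        "right": build_kdtree(points[mid + 1:], depth + 1),
    }


def sq_dist(a, b):
    """Squared Euclidean distance (the square root is not needed for ranking)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node, target, best=None):
    """Return the stored point closest to target, pruning subtrees that cannot win."""
    if node is None:
        return best
    if best is None or sq_dist(node["point"], target) < sq_dist(best, target):
        best = node["point"]
    axis = node["axis"]
    diff = target[axis] - node["point"][axis]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, target, best)
    # Only visit the far side if the splitting plane is closer than the best match so far.
    if diff ** 2 < sq_dist(best, target):
        best = nearest(far, target, best)
    return best


rng = random.Random(0)
points = [(rng.random(), rng.random()) for _ in range(200)]
tree = build_kdtree(points)
query = (0.5, 0.5)
found = nearest(tree, query)
print(found)
```

In two dimensions the pruning step skips most of the tree. As dimensionality grows, the splitting plane is almost always closer than the current best match, so fewer subtrees can be skipped and the search degrades toward a full scan. That is the motivation for approximate methods such as HNSW.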

Querying in a Vector Database

When a query is submitted, it’s first converted into an embedding vector (e.g., through a language or vision model). The database then compares this query vector to existing embeddings using similarity or distance measures like:

- Cosine Similarity

- Euclidean Distance

- Dot Product

The most similar vectors are returned as results, allowing highly relevant retrieval even for complex unstructured data.
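The three measures above do not always agree on which vector is "most similar". The sketch below implements them in plain Python and ranks a small, made-up set of vectors with each one. The vector names and the `top_k` helper are hypothetical; a real vector database would use an index instead of sorting every vector.

```python
import math


def dot_product(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Compares direction only; vector length is ignored."""
    return dot_product(a, b) / (math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b)))


def euclidean_distance(a, b):
    """Straight-line distance; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query, vectors, k, score, higher_is_better=True):
    """Brute-force ranking of every stored vector by the given score."""
    ranked = sorted(
        vectors,
        key=lambda name: score(query, vectors[name]),
        reverse=higher_is_better,
    )
    return ranked[:k]


# Made-up 2-dimensional "embeddings", chosen so each measure picks a different winner.
vectors = {
    "same_direction_far": [5.0, 5.0],
    "close_but_tilted": [1.0, 0.5],
    "large_off_axis": [0.0, 20.0],
}
query = [1.0, 1.0]

by_cosine = top_k(query, vectors, 1, cosine_similarity)
by_euclidean = top_k(query, vectors, 1, euclidean_distance, higher_is_better=False)
by_dot = top_k(query, vectors, 1, dot_product)
print(by_cosine, by_euclidean, by_dot)
```

Cosine similarity favors the vector pointing in the same direction, Euclidean distance favors the one that is physically closest, and the dot product rewards large magnitudes. When embeddings are normalized to unit length, the three measures produce the same ranking, which is one reason normalization is common in practice.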

Use Cases of Vector Databases

Vector databases are used across a range of industries:

- E-commerce: Image-based product searches and personalized recommendations

- Healthcare: Anomaly detection in medical imaging and patient history retrieval

- Media and Entertainment: Content-based search for videos, music, and text

- Enterprise Search: Querying vast repositories of unstructured documents for relevant information