In today’s AI-driven world, Large Language Models (LLMs) are powering everything from customer service chatbots to content creation tools.

But with this power comes complexity: how do you ensure your LLM applications are reliable, accurate, and performant? Enter LangSmith, a platform from the LangChain team for testing, debugging, and monitoring LLM applications.

Why Every LLM Developer Needs LangSmith

If you’ve ever struggled with unpredictable LLM outputs, mysterious errors in your AI workflows, or simply wondered how to systematically improve your prompts, LangSmith was built for you. This comprehensive toolkit addresses the unique challenges of building with LLMs by providing robust testing, debugging, and monitoring capabilities.

How LangSmith Transforms LLM Development

Test with Confidence

With LangSmith, you can build datasets of structured test cases (inputs paired with reference outputs) and run evaluators over them to check that your AI responses stay consistent and accurate. You no longer have to hope your model performs well; you can measure it across different scenarios and datasets.
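To make the idea concrete, here is a minimal sketch of what a structured test case boils down to: a dataset of inputs paired with reference outputs, a target function, and an evaluator that scores each result. This is plain Python, not the LangSmith SDK. The names `Example`, `toy_model`, `exact_match`, and `run_tests` are hypothetical, and `toy_model` stands in for a real LLM call. LangSmith stores datasets and evaluation results for you, but the basic loop is similar.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Example:
    """One test case: the input we send and the answer we expect back."""
    question: str
    reference: str


def toy_model(question: str) -> str:
    """Hypothetical stand-in for an LLM call; a real app would call a model here."""
    canned = {
        "What is the capital of France?": "Paris",
        "What is 2 + 2?": "4",
    }
    return canned.get(question, "I don't know")


def exact_match(output: str, reference: str) -> float:
    """Score 1.0 if output matches reference, ignoring case and surrounding whitespace."""
    return 1.0 if output.strip().lower() == reference.strip().lower() else 0.0


def run_tests(
    dataset: list[Example],
    target: Callable[[str], str],
    evaluator: Callable[[str, str], float],
) -> dict:
    """Run the target on every example and collect per-example scores plus the mean."""
    results = []
    for ex in dataset:
        output = target(ex.question)
        results.append({
            "question": ex.question,
            "output": output,
            "score": evaluator(output, ex.reference),
        })
    mean = sum(r["score"] for r in results) / len(results) if results else 0.0
    return {"results": results, "mean_score": mean}


dataset = [
    Example("What is the capital of France?", "Paris"),
    Example("What is 2 + 2?", "4"),
    Example("Who wrote Hamlet?", "William Shakespeare"),
]

report = run_tests(dataset, toy_model, exact_match)
for r in report["results"]:
    print(f'{r["score"]:.0f}  {r["question"]} -> {r["output"]}')
print(f'mean score: {report["mean_score"]:.2f}')
```

If you rerun the same dataset after each prompt or model change, "it seems better" becomes a number you can compare. The failing third case is the kind of gap a test suite is meant to surface.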

Debug with Tracing

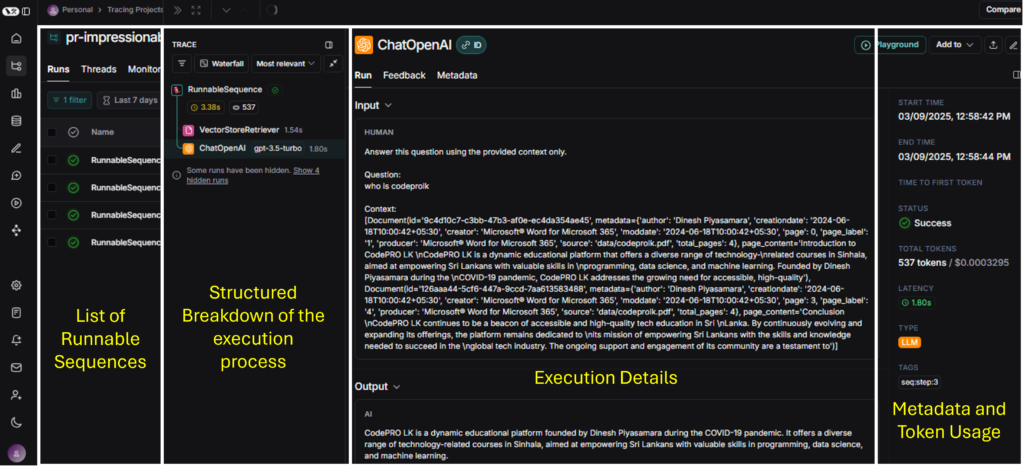

LangSmith’s tracing records each step of your LLM workflow, such as prompts, model calls, and tool calls, along with their inputs, outputs, and latencies. You can then visualize the whole run and quickly pinpoint bottlenecks or errors.

Monitor in Production

Once deployed, your LLM application needs continuous attention. LangSmith traces real user interactions and surfaces metrics such as response times and error rates. This lets you spot regressions and unexpected behavior early, often before users report them.
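Monitoring mostly comes down to aggregating per-request records into a few health metrics and alerting when they cross a threshold. The following sketch is plain Python rather than the LangSmith API. It computes an error rate and a nearest-rank p95 latency over a window of hypothetical request records. The `Request` type, the sample numbers, and the alert thresholds are all made up for illustration. LangSmith's monitoring views chart comparable metrics from your production traces.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One production call: how long it took and whether it failed (hypothetical schema)."""
    latency_ms: float
    error: bool


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile: smallest value with at least 95% of samples at or below it."""
    ordered = sorted(values)
    rank = -(-95 * len(ordered) // 100)  # integer ceiling of 0.95 * n, avoiding float rounding
    return ordered[rank - 1]


def summarize(window: list[Request]) -> dict:
    """Aggregate a non-empty window of requests into request count, error rate, and p95 latency."""
    if not window:
        raise ValueError("window must contain at least one request")
    return {
        "requests": len(window),
        "error_rate": sum(r.error for r in window) / len(window),
        "p95_latency_ms": p95([r.latency_ms for r in window]),
    }


# Hypothetical thresholds; tune these to your own service-level targets.
MAX_ERROR_RATE = 0.05
MAX_P95_MS = 2000.0

# Hypothetical window of 20 requests: mostly fast, one slow outlier, two failures.
window = [Request(400.0 + 10 * i, error=False) for i in range(17)]
window += [Request(3500.0, error=False), Request(450.0, error=True), Request(500.0, error=True)]

stats = summarize(window)
alerts = []
if stats["error_rate"] > MAX_ERROR_RATE:
    alerts.append(f'error rate {stats["error_rate"]:.0%} exceeds {MAX_ERROR_RATE:.0%}')
if stats["p95_latency_ms"] > MAX_P95_MS:
    alerts.append(f'p95 latency {stats["p95_latency_ms"]:.0f} ms exceeds {MAX_P95_MS:.0f} ms')
print(stats)
print(alerts or "healthy")
```

In this window, the single 3.5 s outlier does not move the p95 (it stays at 560 ms). The two failures push the error rate to 10%, so the error-rate check is the one that fires. Watching several metrics together catches problems that any one of them would miss.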

Real-World Applications

LangSmith shines across various use cases:

- Chatbots and Virtual Assistants: Ensure consistent, accurate responses even in complex conversations

- RAG Systems: Optimize both retrieval accuracy and response generation

- Content Generation: Refine outputs and minimize hallucinations

- High-Stakes Applications: Keep an auditable record of model behavior to support compliance and reliability in finance, healthcare, and legal AI

Conclusion

As LLMs become central to modern applications, tools like LangSmith are essential for developers who want to build with confidence. By providing visibility, control, and systematic improvement capabilities, LangSmith helps ensure your AI applications remain robust and reliable in production.

Whether you’re debugging complex workflows, optimizing prompts, or monitoring deployed systems, LangSmith gives you the insights needed to take your LLM applications from experimental to exceptional.